JavaScript is an essential aspect of building engaging sites, but it can drastically affect the way pages load. Like any other file building a web page, JavaScript needs to load before it can function. The size of JavaScript files along with the way these files are delivered both affect the load time. JavaScript is also a dynamic language, and compiles on the fly once the browser receives it. This makes JavaScript processing more costly than processing other file types.

This post is going to cover delivery optimization for JavaScript: why you should care, considerations, and best practices, along with some links to relevant resources. Optimizing JavaScript processing is also incredibly important to the way modern websites load, but I’ll be addressing it in a separate post.

The impact of JS file size

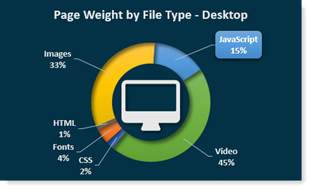

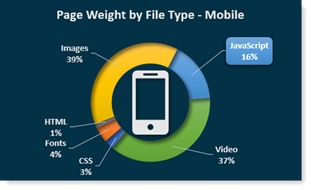

Across the millions of sites measured by the HTTP Archive, JavaScript accounts for 15% of page weight for desktop pages and 16% for mobile pages. For desktop users, this equates to about 400 KB per page visited, and 360 KB for mobile users. When you consider that the first round trip on a new connection typically delivers only about 14 KB of data, it becomes obvious that reducing JS file size is an important optimization to make.

But 400 KB of JavaScript is not equal to 400 KB of an image loading, partly because JavaScript is processed in its uncompressed form. Byte for byte, JavaScript is more expensive than any other file type to process on the page in terms of CPU and time. When you consider that GZIP compression can reduce delivered text file size by 70 to 90%, 400 KB of transferred JavaScript really becomes 1.3 to 4.0 MB once it is decompressed.
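The arithmetic behind that range can be sketched as follows. The function name and the rounding are illustrative choices, not part of any library; the 70% and 90% figures are the compression savings quoted above.

```javascript
// Estimate the uncompressed size of a text file from its transferred size.
// `savings` is the fraction removed by compression (0.7 means 70% smaller).
function estimateUncompressedKB(transferredKB, savings) {
  if (savings < 0 || savings >= 1) {
    throw new RangeError("savings must be in the range [0, 1)");
  }
  return Math.round(transferredKB / (1 - savings));
}

const low = estimateUncompressedKB(400, 0.7);  // about 1,333 KB (~1.3 MB)
const high = estimateUncompressedKB(400, 0.9); // about 4,000 KB (4.0 MB)
console.log(low, high);
```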

Reducing the impact of JS delivery

So how can we reduce how much page loading time is associated with JavaScript delivery? There are two ways, with best practices for each:

- Make JS load faster in the browser.

  - Reduce how much JS you use to build your page.

  - Minify all JS resources, and use minified third-party JS.

  - Self-host third-party JS (or use a service worker).

  - Compress JS.

  - Use push and preload for critical JS.

- Only load JS when it’s needed.

  - Eliminate dead JS code.

  - Split JS files to deliver essential components.

  - Load non-critical JS components asynchronously.

  - Cache JS.

We’ll cover the best practices listed for accomplishing both goals, including considerations for third-party JavaScript that you may not have as much control over.

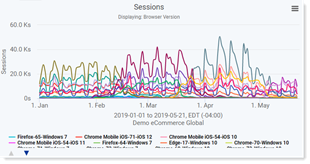

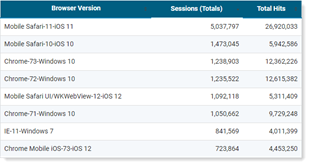

For the following, it’s important to make sure your user base has browser support for the methods implemented. Real User Monitoring lets you see which versions are most common among your users and should be considered essential for testing any changes you make to your site.

Make JS load faster in the browser

Reduce how much JS you use to build the page

If you deliver less JavaScript, the page will load faster.

Not sure where to start? We already know that JS is the most expensive kind of file used to build your site, so you can also think about it in terms of processing. For interactivity on the page, best practice says to use JavaScript for features that enhance user experience – expected transitions, animations, and events – rather than things that can disrupt like flashy, drawn-out animations.

Are there third-party pieces of JavaScript that are redundant, or that aren’t essential to what you want the page to do? Consider eliminating these too.

Google Developers has produced guidelines based on user perceptions of performance delays, which you can use to judge whether JS is necessary on a page in conjunction with testing.

| Delay | User perception of performance delays |
| --- | --- |
| 0 to 16ms | Users are exceptionally good at tracking motion, and they dislike it when animations aren't smooth. They perceive animations as smooth so long as 60 new frames are rendered every second. That's 16ms per frame, including the time it takes for the browser to paint the new frame to the screen, leaving an app about 10ms to produce a frame. |
| 0 to 100ms | Respond to user actions within this time window and users feel like the result is immediate. Any longer, and the connection between action and reaction is broken. |
| 100 to 300ms | Users experience a slight perceptible delay. |
| 300 to 1000ms | Within this window, things feel part of a natural and continuous progression of tasks. For most users on the web, loading pages or changing views represents a task. |
| 1000ms or more | Beyond 1000 milliseconds (1 second), users lose focus on the task they are performing. |
| 10000ms or more | Beyond 10000 milliseconds (10 seconds), users are frustrated and are likely to abandon tasks. They may or may not come back later. |

Use minified JS files

Minification strips out unnecessary punctuation, white space, and new line characters. Many minifiers also shorten variable names (often called mangling, and sometimes described as obfuscation), replacing human-readable names with ones that take fewer bytes to transfer and parse.

There are many minification tools online, not all of which rename variables. Consider which is best for your site, and always use some form of minification. The fewer bytes of data you transfer per JavaScript file, the faster it can load, and the quicker the browser can ultimately parse it.
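To make the idea concrete, here is a small before-and-after sketch. The second version was minified by hand for illustration; it is not the output of any particular tool, and real minifiers may produce something different.

```javascript
// Readable source, as a developer would write it.
function calculateTotalPrice(itemPrices, taxRate) {
  const subtotal = itemPrices.reduce((sum, price) => sum + price, 0);
  return subtotal * (1 + taxRate);
}

// Hand-minified equivalent: whitespace removed and names shortened.
// (Illustrative only; real tools may produce different output.)
const t=(a,b)=>a.reduce((c,d)=>c+d,0)*(1+b);

// Both versions compute the same result from the same inputs.
console.log(calculateTotalPrice([10, 20], 0.5) === t([10, 20], 0.5)); // true
```

The behavior is identical, but the second version is a fraction of the length, and that difference adds up across a whole bundle.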

Self-host third-party JS where applicable (or use a service worker)

Hosting third-party JavaScript on your own domain can be less maintainable – you won’t get automatic updates for the script, and changes to the API may occur without warning – but it may still be useful if a script is critical to rendering your page. The script is then served over your own connection and under your own security policies, which avoids an extra DNS lookup and connection to a third-party host and can lead to better outcomes. You can also include only the components in libraries that are used in your source code (see “eliminate dead code” below).

An alternative to self-hosting third-party scripts is using a service worker to determine how often a piece of content should be fetched from the network, although this option may not be as accessible as self-hosting for sites with legacy architecture.

Compress JS

Server-side compression of text files can reduce file size substantially. Because fewer bytes are transferred, more of the uncompressed JavaScript arrives in each round trip, which reduces the number of round trips needed to deliver the file. Compressing JavaScript, along with CSS and other text files, can reduce page weight by half.
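As a rough illustration, the following Node.js sketch gzips a string and compares sizes using the built-in zlib module. The sample text is a made-up stand-in for a JavaScript file, and because it is highly repetitive it compresses far better than real code would, so treat the printed savings as a demonstration of the mechanism rather than a benchmark.

```javascript
const zlib = require("zlib");

// Stand-in for a JavaScript file: repetitive text, as source code tends to be.
const source = "function add(a, b) { return a + b; }\n".repeat(500);

const original = Buffer.byteLength(source, "utf8");
const compressed = zlib.gzipSync(source).length;
const savings = 1 - compressed / original;

console.log(`original: ${original} bytes, gzipped: ${compressed} bytes`);
console.log(`savings: ${(savings * 100).toFixed(1)}%`);
```

In practice, compression is usually switched on in the web server or CDN configuration rather than in application code.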

Use push and preload for critical JS

Server push and preload can both be used to deliver files that are critical to rendering the page earlier in the page load than normal.

A file is delivered with push when the server has been configured beforehand to send it: when a request for the page arrives, the server sends resources x, y, and z along with the response, without waiting for the browser to ask for them. Notably, server push requires HTTP/2.

Preload works differently: a resource is declared in the HTML with a link element whose rel attribute is set to “preload”. As soon as the page is delivered and HTML parsing begins, the browser requests these resources from the server. This is faster than normal loading, because the browser doesn’t wait until the parser reaches each resource in the order it is listed in the HTML.
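A preload hint can be written in the HTML or sent as an HTTP `Link` response header. The sketch below builds the header value in JavaScript; the helper name and the file path are hypothetical, and how the header is attached to a response depends on your server.

```javascript
// Build the value of an HTTP `Link` response header that asks the browser
// to preload a script. The equivalent HTML is:
//   <link rel="preload" href="/js/critical.js" as="script">
function preloadHeaderValue(url) {
  return `<${url}>; rel=preload; as=script`;
}

// "/js/critical.js" is a hypothetical path used for illustration.
const headerValue = preloadHeaderValue("/js/critical.js");
console.log(`Link: ${headerValue}`);
```

Preloading only fetches the file early. The script still needs a regular script tag (or an import) to execute.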

Only load JS when it’s needed

Within each JavaScript file, make sure that only code that gets used is included. Additionally, you may not need to load every JavaScript file on every page of your site. To accomplish these ends, I’m going to cover the optimizations you should know and link to relevant resources.

Eliminate dead code with tree shaking

Tree shaking, or dead code elimination, gets rid of – you guessed it – code that isn’t used on your page. For example, say you’re downloading the minified jQuery library, but you only use a handful of the features offered by jQuery in your own code when building your page. Dead code elimination would allow you to deliver only the parts of the library that you’re using.

The term “tree-shaking” was popularized by Rollup, which implements this feature, and it’s also available through webpack; a tool like source-map-explorer can help you see what ends up in a bundle. Notably, this functionality only works with ES2015 modules, which use the import and export syntax to reference code in other files. If you’re using CommonJS, there are some options available like webpack-common-shake, but you will likely run into limitations with CommonJS. Long-term, if you would like to use tree-shaking, ES2015 or later will be most useful.

Deliver essential components with route-based chunking, or code splitting

Route-based chunking, or code splitting, applies to websites that load single-page applications, progressive web applications, or any other framework that requires a large JS bundle to build the first page that a user sees. Using route-based chunking allows only the necessary chunks of the JS bundle for initial rendering and interactivity to be loaded to the page at first, which reduces the total amount of JavaScript transferred to build the page as well as the time it takes to render meaningful content.

How you determine where to split your code depends on the kind of application you’re loading, who your users are, and what your backend architecture is like. There are a few types of code splitting:

- Vendor splitting separates your JS from JS provided by your framework vendor, like React or Vue. Vendor splitting prevents reloading a larger bundle when a feature in either code source is changed. Vendor splitting should be done in every web application.

- Splitting with entry points separates your code based on where in your code webpack starts to identify dependencies in your application. If your site does not use client-side routing (single-page applications, for example, do use it), using entry points to split your code may make sense. With this kind of splitting, using a tool like Bundle Buddy to ensure that duplicate code isn’t included across bundles can help pages load faster.

- Dynamic code splitting works well with applications that use client-side routing, like single-page applications, because not all users will use every piece of functionality on a page. This can be done using the dynamic import() syntax, which returns a Promise. For that reason, you can also use dynamic imports with async/await. Parcel and webpack will both look for dynamic import() calls to lazy load resources as they’re needed.
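Dynamic code splitting can be sketched roughly as follows. The `lazy` helper, the `./chart.js` path, and its `render` export are all hypothetical names chosen for illustration; the only real API used is the dynamic `import()` itself.

```javascript
// Wrap a loader so the module is requested at most once, on first use.
function lazy(loader) {
  let pending = null;
  return function load() {
    if (pending === null) {
      pending = loader();
    }
    return pending;
  };
}

// Hypothetical module path: nothing is fetched until loadChart() is called.
const loadChart = lazy(() => import("./chart.js"));

// Example handler: load the charting code only when the user asks for it.
async function onShowChartClick(data) {
  const chart = await loadChart();
  return chart.render(data); // `render` is an assumed export of chart.js
}
```

A bundler that understands dynamic imports would typically place `chart.js` and its dependencies in a separate chunk, so users who never open the chart never download it.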

Load non-critical JS components asynchronously

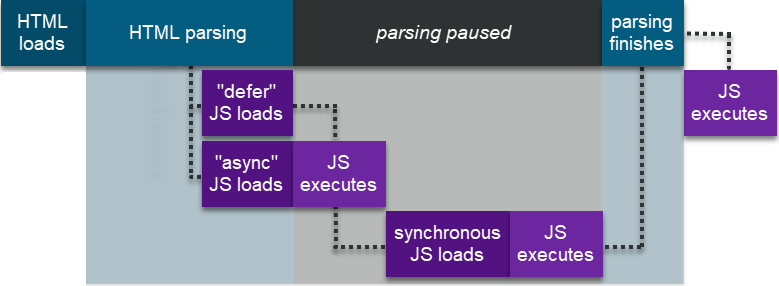

Using the async and defer attributes for external scripts is essential, especially with third-party scripts. Both attributes let the browser download a script in the background while it continues parsing the HTML. Why is that important? For JavaScript, the default behavior is synchronous: HTML parsing stops while the script is downloaded and executed, so almost every other process on the page waits.

If both attributes are set on the same script, async takes precedence in browsers that support it. Older browsers that support only defer fall back to it.

Notably, JavaScript execution all happens on the same browser thread. A script marked async executes as soon as it finishes downloading, in no guaranteed order, while scripts marked defer execute in document order once HTML parsing is complete. Synchronous JavaScript blocks parsing until it has downloaded and executed, and should only be used for code that renders the page (and probably not even then).
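In HTML, these are simply attributes on the script tag. The helper below is a hypothetical sketch of how a server-side template might emit them; the function name, mode names, and URLs are assumptions for illustration.

```javascript
// Render a <script> tag for an external file with an explicit loading mode.
// mode: "async"    - run as soon as downloaded, order not guaranteed
//       "defer"    - run in document order after HTML parsing finishes
//       "blocking" - default browser behavior: pause parsing to fetch and run
// Note: `src` is assumed to be a trusted value; it is not escaped here.
function scriptTag(src, mode = "defer") {
  const allowed = ["async", "defer", "blocking"];
  if (!allowed.includes(mode)) {
    throw new RangeError(`unknown mode: ${mode}`);
  }
  const attribute = mode === "blocking" ? "" : ` ${mode}`;
  return `<script src="${src}"${attribute}></script>`;
}

// Hypothetical URLs for illustration.
console.log(scriptTag("https://example.com/analytics.js", "async"));
console.log(scriptTag("/js/app.js"));
```

Defaulting to defer in this sketch is a design choice: it keeps scripts from blocking the parser while preserving their order, which tends to be the safer option for code with dependencies.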

Cache JS

Caching can be done several ways and is inter-related with some of the functionality of service workers. What caching allows you to do is determine the rules for when a browser should download a resource from the server instead of getting it much faster, no download required, from the cache.

Caching can substantially reduce load times and delivery costs to the page and should be utilized for JavaScript in almost every circumstance.
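One common way to express caching rules is the `Cache-Control` response header. The sketch below picks a header value based on the filename. It assumes that your build step puts a content hash in the filename, and the function name, pattern, and paths are hypothetical.

```javascript
// Choose a Cache-Control value for a JavaScript file based on its name.
// Assumption: the build step puts a content hash in the filename
// (for example app.3f9a1c2b.js), so a changed file always gets a new URL.
const HASHED_FILE = /\.[0-9a-f]{8,}\.js$/;

function cacheControlFor(path) {
  if (HASHED_FILE.test(path)) {
    // Safe to cache for a year: new content means a new filename.
    return "public, max-age=31536000, immutable";
  }
  // Unhashed files: let the browser store them but revalidate before reuse.
  return "no-cache";
}

console.log(cacheControlFor("/js/app.3f9a1c2b.js"));
console.log(cacheControlFor("/js/app.js"));
```

Long cache lifetimes are only safe when the URL changes with the content, which is why the sketch treats hashed and unhashed filenames differently.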

Takeaways and the TL;DR

Using features of modern frameworks makes JavaScript more maintainable than ever. While at first glance it can seem convoluted, there are just a few goals JavaScript best practices aim for:

- Smaller page sizes

- Faster delivery

- More efficient processing

In this post, we cover the first two, which are interconnected. Reducing the amount of code you deliver to your users makes pages smaller, and smaller pages are delivered faster.

The TL;DR

- Eliminating unnecessary JavaScript, minifying and compressing JavaScript, tree shaking, and code splitting all reduce the amount of JavaScript delivered to a page.

- Delivering assets with a service worker and utilizing caching can further control whether or not JavaScript gets downloaded to a page.

- Loading scripts asynchronously, and using preload and push for critical JavaScript, also allows a page to load more quickly.

As always, make sure to test any changes before you implement them, and then see how they affect your user base with real user monitoring. And check back for the next post, which will cover the third aim for JavaScript performance – more efficient JavaScript processing.

<<< Part 1: How to Optimize Images to Improve Web Performance
